Project Garden

AWS Services used:

AWS Lambda, S3, DynamoDB & Rekognition.

By Rauni Ribeiro.

8-minute read - Published 07:38 AM EST, Fri February 11, 2024

The idea behind this project is to demonstrate the combined use of integrated AWS services such as S3, Lambda, DynamoDB, and AWS Rekognition (machine learning). It is built around a hypothetical scenario: an industrial farm still uses spreadsheets to keep its stock inventory of big bags/fertilizers. The farm was recently attacked, and the theft of sensitive information cost it a lot. It now needs a safer environment to manage its stock inventory, and this is where our application comes in:

Table of Contents:

Introduction:

- Brief overview of the project
- My background and AWS Cloud Practitioner certification

AWS services used:

- S3
- Lambda
- DynamoDB
- Rekognition

Code snippets:

- Examples of code snippets used in the project
- Explanation of each snippet and its purpose

Challenges faced:

- Detailed description of the challenges encountered during the project development
- How these challenges were overcome

Lessons learned:

- Key takeaways from the project
- How these lessons can be applied to future projects

Conclusion:

- Recap of the project and its outcomes
- Future plans and potential improvements

Project Overview - Big Bag

The project was built with Boto3, the AWS SDK for Python, and split into two Lambda files:

- lambda_function1.py # calls S3 resources,

- lambda_function2.py # calls Rekognition and DynamoDB resources.

I took the liberty of decoupling the project, which allows for better scalability and easier maintenance. The main premise is that function1 invokes function2 directly through the lambda.invoke() method with a JSON payload, without the need for AWS Step Functions. Since this is a small project, I figured orchestrating it would be unnecessary.

Code Snippets — lambda_function1.py

Calling AWS S3 & Lambda clients:

The Lambda function identifies the object most recently added to the S3 bucket and uses the strftime method to format its timestamp down to the second:
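A minimal sketch of this step, not the original snippet. It assumes the bucket holds fewer than 1,000 objects (a single `list_objects_v2` page) and that a `%Y-%m-%d %H:%M:%S` format string is close to what the project used. `find_last_added` and the returned field names are hypothetical, and `s3_client` stands for the client returned by `boto3.client("s3")`:

```python
def find_last_added(s3_client, bucket_name):
    """Return the key and formatted timestamp of the newest object, or None."""
    # One call returns at most 1,000 objects; larger buckets need pagination.
    response = s3_client.list_objects_v2(Bucket=bucket_name)
    objects = response.get("Contents", [])
    if not objects:
        return None
    # LastModified is a datetime, so max() picks the most recent upload.
    newest = max(objects, key=lambda obj: obj["LastModified"])
    return {
        "key": newest["Key"],
        # Assumed format: date and time down to the second.
        "last_modified": newest["LastModified"].strftime("%Y-%m-%d %H:%M:%S"),
    }
```

Passing the client in as an argument keeps the function easy to try locally with a stand-in object before deploying it.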

Setting a default API string message to confirm whether our lambda function 1 is working:
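One possible shape for such a default message, assuming the function returns the usual `statusCode`/`body` dictionary. The helper name and the message text are illustrative, not taken from the project:

```python
import json


def build_response(message, status_code=200):
    """Wrap a plain message in a statusCode/body response dictionary."""
    return {
        "statusCode": status_code,
        "body": json.dumps({"message": message}),
    }


# Hypothetical default message confirming that function 1 ran.
default_response = build_response("lambda_function1 executed successfully")
```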

Invoking lambda_function2 from lambda_function1.

Note: This is where I declared the 'last_added' S3 object and passed it from lambda_function1 to lambda_function2 through the payload:
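The invocation could be sketched as follows. The deployed function name `lambda_function2` and the payload key `last_added` are assumptions based on the names used in this article, and `lambda_client` stands for `boto3.client("lambda")`:

```python
import json


def invoke_second_function(lambda_client, last_added, function_name="lambda_function2"):
    """Synchronously invoke the second function, passing last_added in the payload."""
    payload = json.dumps({"last_added": last_added}).encode("utf-8")
    return lambda_client.invoke(
        FunctionName=function_name,        # assumed deployed function name
        InvocationType="RequestResponse",  # wait for function 2 to finish
        Payload=payload,
    )
```

`RequestResponse` makes the call synchronous, so function 1 receives whatever function 2 returns. `InvocationType="Event"` would instead fire and forget.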

Parsing the Payload response from lambda_function2.py:
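A sketch of how the payload can be read back, assuming function 2 returns a JSON document. `parse_invoke_response` is a hypothetical helper that takes the dictionary returned by `lambda_client.invoke(...)`:

```python
import json


def parse_invoke_response(invoke_response):
    """Decode the JSON document returned by the invoked function."""
    # "FunctionError" is only present when the invoked function raised an error.
    if "FunctionError" in invoke_response:
        raise RuntimeError(f"lambda_function2 failed: {invoke_response['FunctionError']}")
    # Payload is a streaming body; read() returns the raw JSON bytes.
    raw = invoke_response["Payload"].read()
    return json.loads(raw)
```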

End of part 1.

Code Snippets — lambda_function2.py

Declaring AWS Rekognition, DynamoDB, image_path (for the object) and accessing S3_last_added_object from function1:

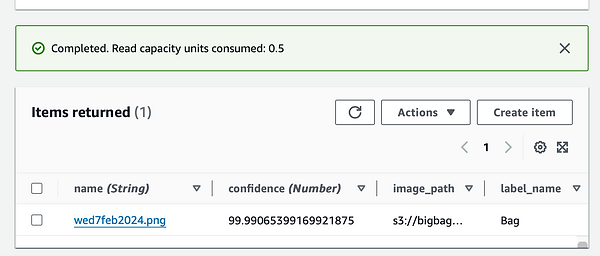
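In text form, these declarations might look roughly like the sketch below. It assumes the payload key is `last_added` and that the object path is written as an `s3://` URI; the bucket name is a placeholder. The Rekognition client and the DynamoDB table would come from `boto3.client("rekognition")` and `boto3.resource("dynamodb").Table(...)`:

```python
def build_image_reference(event, bucket_name):
    """Read last_added from function 1's payload and point Rekognition at it."""
    s3_last_added_obj = event["last_added"]  # assumed payload key
    image_path = f"s3://{bucket_name}/{s3_last_added_obj}"
    # Rekognition's Image parameter accepts an S3Object with Bucket and Name.
    image = {"S3Object": {"Bucket": bucket_name, "Name": s3_last_added_obj}}
    return s3_last_added_obj, image_path, image
```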

Adding an if statement for our s3_last_added_obj: if rekognition_name == s3_last_added_obj and the confidence level is 95% or higher, the item is saved to our DynamoDB table:
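The check can be sketched like this, assuming `labels` is the list of label dictionaries (each with `Name` and `Confidence`) from the Rekognition response and `table` is a DynamoDB `Table` resource. The attribute names are hypothetical, since the table's schema is not shown in this article:

```python
from decimal import Decimal

CONFIDENCE_THRESHOLD = 95.0  # percent, as described above


def save_matching_labels(table, labels, s3_last_added_obj, image_path):
    """Store every label that matches the object name with enough confidence."""
    saved = []
    for label in labels:
        rekognition_name = label["Name"]
        confidence = label["Confidence"]
        if rekognition_name == s3_last_added_obj and confidence >= CONFIDENCE_THRESHOLD:
            item = {
                "object_name": s3_last_added_obj,  # hypothetical attribute names
                "label_name": rekognition_name,
                # The DynamoDB resource API rejects floats, so convert to Decimal.
                "confidence": Decimal(str(confidence)),
                "object_path": image_path,
            }
            table.put_item(Item=item)
            saved.append(item)
    return saved
```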

Adding the values obtained from iterating over response_rekognition to our responseBody, so we can output them and confirm success:
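A possible way to assemble that response body, assuming the saved items are dictionaries that may contain `Decimal` values (as the DynamoDB resource API requires). The field names `saved_count` and `items` are illustrative:

```python
import json


def build_response_body(saved_items):
    """Summarize the saved items in a JSON response body."""
    body = {
        "saved_count": len(saved_items),
        "items": saved_items,
    }
    # default=str converts Decimal values, which json cannot serialize natively.
    return {"statusCode": 200, "body": json.dumps(body, default=str)}
```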

CloudWatch logs:

And the final Output:

Note: DynamoDB stores the S3 object name, the confidence level returned by AWS Rekognition, the object path, and the label name obtained from our Rekognition response.

End of part 2.

Challenges faced & lessons learned:

1. Training Rekognition datasets (machine learning):

I had to learn more about machine learning, download sets of big bag images, and manually label them so I could help train our machine-learning solution to efficiently determine whether the object in the picture is, indeed, a big bag;

2. Keeping it safe:

Manually configuring IAM roles took a significant amount of time, given the number of integrated AWS services involved and the need to pass data between them while following the principle of least privilege;

3. Cloud Optimization:

I spent a good amount of time optimizing my solution for better performance, reliability, and cost-effectiveness. I even went as far as completely rewriting the second function and removing redundant code that wasted resources, which made the code cleaner and clearer;

4. Learning more about the structure of JSON objects and DynamoDB tables:

Learning more about the structure of JSON objects gave me a fresh perspective on how to structure, manipulate, and transfer data to other relevant systems and components;

5. Designing the project:

Initially, this was a monolithic project, but I realized that future development would be very hard if it stayed that way, so I rearranged and decoupled the project for better scalability, agility, and easier maintenance. I also made sure to avoid unnecessary code and additional AWS services, to keep the solution simple and efficient.
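To make the least-privilege point from item 2 concrete, here is a hedged sketch of what a narrowly scoped policy for function 1 could look like, written as a Python dictionary. The bucket name and function ARN are placeholders, and the real roles used in the project are not shown in this article. CloudWatch Logs permissions are left out for brevity:

```python
import json


def function1_policy(bucket_name, function2_arn):
    """Build an IAM policy document that only allows what function 1 needs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Needed to list the bucket and find the newest object.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Needed to invoke function 2, and nothing else.
                "Effect": "Allow",
                "Action": "lambda:InvokeFunction",
                "Resource": function2_arn,
            },
        ],
    }


# Placeholder names, for illustration only.
policy_json = json.dumps(
    function1_policy(
        "example-garden-bucket",
        "arn:aws:lambda:us-east-1:123456789012:function:lambda_function2",
    ),
    indent=2,
)
```

Function 2 would get its own role along the same lines, limited to reading the image, calling Rekognition, and writing to the one DynamoDB table.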

Conclusion:

Project Review:

As we have seen, an employee can take a picture of a big bag and upload it directly to our S3 bucket every time a new item needs to be added to the stock inventory, and the resulting record is then stored securely in a DynamoDB table. Using S3 also helps keep the files safe, since it offers several layers of protection against ransomware and malicious attackers.

Future plans and possible improvements:

- Creating a secure web application for data entry would provide a friendly interface and make it easy for workers to securely upload files to our S3 bucket.

- Adding a feature to count the number of bags in a picture would reduce the workload and make the worker's life easier, since a single photo would be enough to capture the total number of bags.

- Amazon EventBridge: Instead of using S3 event notifications to trigger the Lambda functions, Amazon EventBridge could be used to create a more scalable and decoupled event-driven architecture. This can help in managing events from S3 and invoking the Lambda functions.
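As a rough sketch of the EventBridge idea, the event pattern below would match "Object Created" events from a single bucket, and the second helper reads the object key from such an event. This assumes EventBridge notifications are enabled on the bucket. The function names and the bucket name are hypothetical:

```python
import json


def s3_object_created_pattern(bucket_name):
    """Event pattern matching S3 'Object Created' events for one bucket."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {"bucket": {"name": [bucket_name]}},
    }


def key_from_eventbridge_event(event):
    """Extract the uploaded object's key from an S3 event delivered by EventBridge."""
    return event["detail"]["object"]["key"]


# The JSON string is what a rule's EventPattern parameter would receive.
pattern_json = json.dumps(s3_object_created_pattern("example-garden-bucket"))
```

With this approach the Lambda function receives the key of the new object directly, so it would no longer need to list the bucket to find the most recent upload.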

End.

Rauni Ribeiro / Cloud Engineer