Project

Nimbus

Services used:

API Gateway, Terraform, DynamoDB, IAM, Lambda & Boto3.

Extra tools:

Git & GitHub

By Rauni Ribeiro.

10-minute read - Published 9:52 PM EST, Sun July 27th, 2025

This project showcases a RESTful API designed for robustness and scalability. API Gateway serves as the entry point and AWS Lambda as a serverless backend, so requests are processed on demand without managing servers. DynamoDB serves as a NoSQL database, and the infrastructure is provisioned with Terraform, following the Infrastructure as Code (IaC) approach. The app features API Key authentication and is set up for smooth communication between the frontend and backend, with the end goal of providing a secure authentication flow for our login system.

Table of Contents:

Introduction:
- Brief overview of the project

Services used:
- API Gateway
- Terraform
- IAM
- Lambda
- DynamoDB
- Boto3

Code snippets:
- Examples of code snippets used in the project
- Explanation of each snippet and its purpose

Challenges faced:
- Detailed description of the challenges encountered during the project development
- How these challenges were overcome

Lessons learned:
- Key takeaways from the project
- How these lessons can be applied to future projects

Conclusion:
- Recap of the project and its outcomes
- Future plans and potential improvements

Project Overview - Terraform

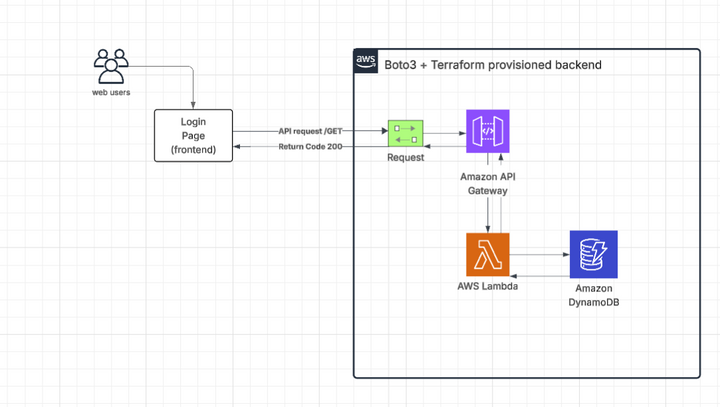

This project demonstrates a serverless backend infrastructure provisioned entirely with Terraform, integrating key AWS services to support a lightweight login system.

The architecture is composed of the following components:

- Login Page (Frontend): A static frontend that allows users to initiate a login request via a POST HTTP call.
- Amazon API Gateway: Serves as the secure and scalable public entry point, receiving HTTP requests from the frontend.
- AWS Lambda (Python + Boto3): Executes business logic upon API calls, processes the request, and interacts with DynamoDB to validate or retrieve user data.
- Amazon DynamoDB: Stores user credentials or metadata in a structured NoSQL format, designed for high availability and low-latency queries.
- Terraform: Automates the provisioning of all infrastructure components, ensuring consistent deployments and enabling version control of infrastructure as code.

The application flow is as follows:

- A web user accesses the login page.
- When the user submits the form, a POST request is sent to the API Gateway endpoint.
- API Gateway invokes the Lambda function.
- The Lambda function queries DynamoDB using the Boto3 SDK.
- On successful execution, API Gateway returns a 200 OK response to the frontend.

All infrastructure was modularized and provisioned using Terraform, following best practices for clarity and scalability. The backend is entirely decoupled from the frontend, allowing for independent evolution and integration into larger systems or CI/CD workflows in the future.

This project applies established patterns of serverless design, Infrastructure as Code (IaC), and event-driven architectures using core AWS services.

Terraform + Python Code Snippets (and URLs) - /bootstrap & /frontend

Code Stack Summary:

Defining the GitHub Actions Workflow (Terraform.yml):

Defining our infrastructure with Terraform's main.tf:

Defining our Lambda function with Boto3 (the AWS SDK for Python):
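The handler source itself is not reproduced in this text. To give a rough idea of what such a function can look like, here is a minimal sketch under several assumptions: a hypothetical `USERS_TABLE` environment variable holds the table name, the table is keyed on `username`, and each item stores a salted PBKDF2 hash (hypothetical `salt` and `password_hash` attributes) rather than a plain-text password. The function name `lambda_handler` and the optional `table` argument, which lets a stand-in table be injected for local testing, are also assumptions.

```python
import hashlib
import hmac
import json
import os

# Same CORS headers described in the API Gateway section.
CORS_HEADERS = {"Access-Control-Allow-Origin": "*", "Access-Control-Allow-Headers": "*"}


def _get_table():
    """Return the DynamoDB table; boto3 is imported lazily so local tests don't need it."""
    import boto3

    # USERS_TABLE is a hypothetical environment variable holding the table name.
    return boto3.resource("dynamodb").Table(os.environ["USERS_TABLE"])


def _response(status, message):
    return {
        "statusCode": status,
        "headers": dict(CORS_HEADERS),
        "body": json.dumps({"message": message}),
    }


def hash_password(password, salt):
    """Salted PBKDF2-HMAC-SHA256 hash; the iteration count is an illustrative choice."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000).hex()


def lambda_handler(event, context, table=None):
    """Validate a username/password pair sent as a JSON body through API Gateway."""
    try:
        data = json.loads(event.get("body") or "{}")
        username, password = data["username"], data["password"]
        if not isinstance(username, str) or not isinstance(password, str):
            raise TypeError("username and password must be strings")
    except (ValueError, KeyError, TypeError):
        return _response(400, "username and password are required")

    table = table if table is not None else _get_table()
    # Assumed item shape: {"username": str, "salt": hex str, "password_hash": hex str}
    item = table.get_item(Key={"username": username}).get("Item")
    if item is None:
        # Same message for unknown users and wrong passwords, so callers can't tell which failed.
        return _response(401, "invalid credentials")

    candidate = hash_password(password, bytes.fromhex(item["salt"]))
    # Constant-time comparison to avoid leaking information through timing.
    if not hmac.compare_digest(candidate, item["password_hash"]):
        return _response(401, "invalid credentials")
    return _response(200, "login successful")
```

Locally, any object with a `get_item(Key=...)` method can be passed as `table`; inside Lambda, the function falls back to `boto3.resource("dynamodb").Table(...)`.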

End of part 1.

Defining our index.html (our login page source code):

🔧 AWS API Gateway – Project Setup Summary

1. API Type

- REST API (not HTTP API)
- We chose REST API for its flexibility and full control over integrations, which is ideal when working with custom Lambda functions.

2. Resource Creation

- Created a resource called /login to serve as the main public endpoint.
- This is the route where the HTML form sends user credentials (username and password).

3. HTTP Method

- Only the POST method was implemented.
- It receives a JSON payload from the frontend login form.
- We used POST because it is the standard method for sending sensitive data such as credentials to the backend: the data travels in the request body rather than in the URL.
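To make the request shape concrete, here is a hedged sketch in Python (standing in for the page's JavaScript) of the POST call the login form makes. The URL is a hypothetical placeholder, and the optional `x-api-key` header reflects the API Key authentication mentioned in the introduction, assuming the key is read from API Gateway's default header.

```python
import json
import urllib.request

# Hypothetical placeholder; the real invoke URL depends on the API ID, region and stage.
LOGIN_URL = "https://api.example.com/dev/login"


def build_login_request(url, username, password, api_key=None):
    """Build (without sending) the POST request the login form would make."""
    payload = json.dumps({"username": username, "password": password}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key is not None:
        # REST APIs read API keys from the x-api-key header by default.
        headers["x-api-key"] = api_key
    return urllib.request.Request(url, data=payload, headers=headers, method="POST")


if __name__ == "__main__":
    req = build_login_request(LOGIN_URL, "alice", "s3cret")
    # The credentials travel in the body; the URL carries no query string.
    print(req.get_method(), req.full_url)
    print(req.data)
```

Sending it is then a matter of `urllib.request.urlopen(req)`; note that the password never appears in `req.full_url`, which is the point of choosing POST over GET.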

4. Lambda Integration

- The POST method is integrated with a custom AWS Lambda function (handler.py).
- Integration type: Lambda Proxy Integration, which passes the entire request (headers, path, and body) to the Lambda function.
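With proxy integration, the Lambda receives the whole HTTP request as an event dictionary and has to parse the body itself. The sketch below uses a heavily trimmed sample event (real events carry many more fields) and a hypothetical `parse_proxy_body` helper; it assumes the frontend always sends a JSON object.

```python
import base64
import json


def parse_proxy_body(event):
    """Return the JSON body of an API Gateway Lambda proxy event as a dict."""
    body = event.get("body")
    if body is None:
        return {}
    # The body arrives as a string; binary payloads are base64-encoded and flagged.
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    parsed = json.loads(body)
    if not isinstance(parsed, dict):
        raise ValueError("expected a JSON object")
    return parsed


# Trimmed-down example event; real events also include requestContext,
# queryStringParameters, multiValueHeaders and more.
sample_event = {
    "resource": "/login",
    "path": "/login",
    "httpMethod": "POST",
    "headers": {"Content-Type": "application/json"},
    "body": '{"username": "alice", "password": "s3cret"}',
    "isBase64Encoded": False,
}

if __name__ == "__main__":
    print(parse_proxy_body(sample_event))
```

Note that `body` is a JSON string rather than a dict, which is a common source of `TypeError`s when a handler treats it as already parsed.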

5. Enable CORS

- CORS was enabled manually on the POST method.
- CORS headers were added both in the method configuration and in the Lambda response:

"headers": { "Access-Control-Allow-Origin": "*", "Access-Control-Allow-Headers": "*" }

- This is required because, with Lambda Proxy Integration, API Gateway returns the Lambda's response to the browser as-is, so the CORS headers must be part of that response. Otherwise, the browser blocks the frontend from reading the response.
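The CORS requirement for proxy integrations boils down to a small helper that every code path in the Lambda can return through. This is a sketch with a hypothetical `proxy_response` name; it reuses the same header values quoted above.

```python
import json

# "*" allows any origin: convenient for a demo, but it could be narrowed
# to the frontend's own origin in production.
CORS_HEADERS = {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "*",
}


def proxy_response(status_code, payload):
    """Build the dict a Lambda proxy integration must return.

    API Gateway forwards these headers unchanged, so leaving CORS_HEADERS
    out means the browser blocks the frontend from reading the response.
    """
    return {
        "statusCode": status_code,
        "headers": dict(CORS_HEADERS),
        "body": json.dumps(payload),  # the body must be a string, not a dict
    }
```

Routing error responses (400, 401, 500) through the same helper matters too: a failure without CORS headers shows up in the browser as an opaque CORS error instead of the real status code.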

6. Deployment Stage

- Created a deployment stage named /dev.
- This exposes the endpoint publicly as:

https://<api-id>.execute-api.

🔧 HTML / GitHub Pages – Project Setup Summary

1. Why was GitHub Pages used in the first place?

- Well, I'm aware that I could have hosted it on other platforms, such as AWS Amplify, an S3 bucket configured for static website hosting, or even AWS Elastic Beanstalk, but I wanted to keep hosting simple since it is not the main focus of this project.

2. How do we configure GitHub Pages with GitHub Actions, then?

- It is fairly simple and intuitive: open your repository, go to Settings, choose 'Pages' in the left side panel, select 'GitHub Actions' as the deployment source, then commit a workflow that deploys the site (GitHub suggests starter workflows, for example one for static HTML).

Note: on a free GitHub plan, the repository must be public for GitHub Pages to work!

End of part 3.

Challenges faced & lessons learned:

1. Managing existing resources:

One key challenge I encountered was how difficult it is to manage resources that already exist. The idea of the project was to never use 'terraform destroy -auto-approve' or anything similar, since I wanted to keep the experience as realistic as possible (as in an actual working environment): we don't want a coworker recklessly destroying prod resources without a valid reason. So I used 'terraform import' commands in our GitHub workflow to bring the existing resources into Terraform state, so they could be managed in place rather than torn down and recreated.
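One way to make such an import step safe to re-run is to check which addresses are already tracked in the Terraform state and import only the rest, since `terraform import` fails for an address that Terraform already manages. The sketch below assumes a hypothetical mapping of Terraform addresses to existing resource IDs (the names are placeholders, not the project's actual resources) and shells out to the `terraform` CLI.

```python
import subprocess

# Hypothetical Terraform addresses mapped to the IDs of resources that already exist in AWS.
# For these two resource types the import ID is the table name and the role name.
EXISTING = {
    "aws_dynamodb_table.users": "users",
    "aws_iam_role.lambda_role": "nimbus-lambda-role",
}


def pending_imports(state_list_output, existing):
    """Return `terraform import` commands for addresses not yet tracked in state."""
    tracked = {line.strip() for line in state_list_output.splitlines() if line.strip()}
    return [
        ["terraform", "import", address, resource_id]
        for address, resource_id in existing.items()
        if address not in tracked
    ]


def run_imports(existing):
    """Import only what is missing, so the step can run on every workflow run."""
    # `terraform state list` prints one tracked resource address per line.
    result = subprocess.run(
        ["terraform", "state", "list"], capture_output=True, text=True, check=False
    )
    # If the command fails (for example, before any state exists), treat nothing as tracked.
    state = result.stdout if result.returncode == 0 else ""
    for command in pending_imports(state, existing):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    run_imports(EXISTING)
```

Keeping the decision logic in `pending_imports` separates it from the CLI calls, so it can be checked without Terraform installed.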

2. Keeping it safe:

I used the POST method to submit sensitive data (username and password) to our Lambda function. I avoided GET because it is generally considered less secure for credentials: GET parameters are sent in the URL, where sensitive data can accidentally end up exposed in browser history or server logs. I also set up IAM role permissions for our Lambda function that allow it to read user data from DynamoDB, so the data retrieval a 'GET' request would normally perform happens inside the function instead.
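A least-privilege setup for this kind of read-only lookup might look like the sketch below, expressed as Python dicts that serialize to IAM policy JSON. The table ARN, region and account ID are placeholders, and granting only `dynamodb:GetItem` assumes the function reads single items by key.

```python
import json

# Hypothetical table ARN; region, account ID and table name are placeholders.
TABLE_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/users"


def lambda_read_policy(table_arn):
    """Identity policy letting the Lambda read single items from one table only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem"],
                "Resource": table_arn,
            }
        ],
    }


# Trust policy that lets the Lambda service assume the execution role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(lambda_read_policy(TABLE_ARN), indent=2))
```

In practice the execution role also needs CloudWatch Logs permissions for the function to write logs (for example via the AWS managed `AWSLambdaBasicExecutionRole` policy), and the same documents can be embedded in Terraform with `jsonencode`.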

3. Terraform:

Configuring new API Gateway resources in our main.tf was particularly challenging because a working API Gateway requires several interdependent resources (the REST API itself, the /login resource, the POST method, the Lambda integration, and the deployment stage). I had to read through the documentation multiple times to implement them efficiently, and I tried to avoid repeating code.

4. Index.html (our Login Page):

I personally don't have much experience with HTML or JavaScript, and since the main focus of this project was not to build a fancy website from the ground up, I started from index.html samples found online and tweaked them where necessary. For the script, I used a couple of references and rewrote the logic behind it.

End of part 4.

Conclusion:

Project Review:

Throughout the course of this project, I encountered various challenges that tested my problem-solving skills and expanded my knowledge in cloud infrastructure and deployment processes. From troubleshooting issues to ensuring security and mastering new tools, each obstacle presented an opportunity for growth and learning.

Future plans and possible improvements:

- Adding more methods, allowing our web app to perform more actions;
- Implementing user authentication tokens (JWT);
- Separating environments (dev/staging/prod);
- Integrating CloudWatch monitoring and alerts;
- Adding CSS to index.html to give end users a better interface;
- Adding a load balancer to distribute network traffic dynamically across backend resources; API Gateway and Lambda already scale automatically, so this would mainly pay off if non-serverless backends were added.
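For the JWT item, the mechanics of an HS256 token can be sketched with the standard library alone: a base64url-encoded header and claims, signed with HMAC-SHA256. This is only an illustration under assumed claim names (`sub`, `iat`, `exp`) and a hypothetical shared secret; a production setup would more likely rely on a maintained library such as PyJWT and keep the secret out of the code.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data):
    """Base64url-encode bytes without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text):
    """Restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _json_segment(obj):
    return _b64url(json.dumps(obj, separators=(",", ":")).encode("utf-8"))


def issue_token(username, secret, ttl_seconds=3600, now=None):
    """Create an HS256 JWT for `username` that expires after `ttl_seconds`."""
    issued_at = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"sub": username, "iat": issued_at, "exp": issued_at + ttl_seconds}
    signing_input = _json_segment(header) + "." + _json_segment(claims)
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_token(token, secret, now=None):
    """Return the claims if the signature and expiry check out, otherwise None."""
    try:
        header_b64, claims_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        # Only accept the algorithm we issue, never whatever the token claims.
        if not isinstance(header, dict) or header.get("alg") != "HS256":
            return None
        signing_input = f"{header_b64}.{claims_b64}".encode("ascii")
        expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        claims = json.loads(_b64url_decode(claims_b64))
    except ValueError:
        # Covers malformed segments, bad base64 and invalid JSON.
        return None
    if not isinstance(claims, dict):
        return None
    current = int(time.time() if now is None else now)
    return claims if claims.get("exp", 0) > current else None
```

In this design the Lambda would issue a token after a successful login and later requests would send it back for verification, instead of resending the password each time.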

End.

Rauni Ribeiro / Cloud Engineer