Project Terraform

Services used:

Terraform, S3, IAM, EC2 & AWS CodePipeline.

Extra tools:

Git & GitHub

By Rauni Ribeiro.

6-minute read - Published 08:43 PM EST, Thu April 1th, 2024

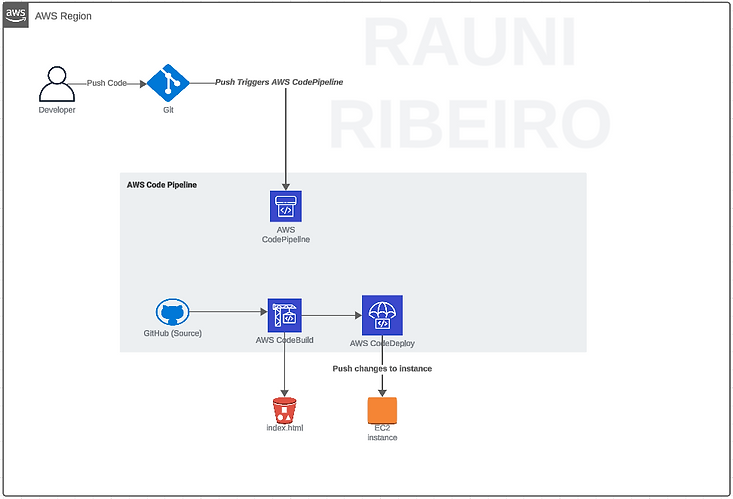

This project showcases how Terraform and AWS CodePipeline can be used with EC2 to host a web server, using a mock sample stored in an S3 bucket directory. The idea is that the pipeline automates the creation of the web server and delivers changes to it continuously.

Table of Contents:

Introduction:

- Brief overview of the project

AWS services used:

- Terraform
- S3
- IAM
- EC2
- AWS CodePipeline

Code snippets:

- Examples of code snippets used in the project
- Explanation of each snippet and its purpose

Challenges faced:

- Detailed description of the challenges encountered during the project development
- How these challenges were overcome

Lessons learned:

- Key takeaways from the project
- How these lessons can be applied to future projects

Conclusion:

- Recap of the project and its outcomes
- Future plans and potential improvements

Project Overview - Terraform

The project primarily uses Terraform and shell scripts. The concept is that developers push changes to the Git repository, which triggers AWS CodePipeline to update the EC2 instance, providing continuous delivery for our web server.

These are the dependencies used to run the web server:

- httpd (Apache HTTP Server)
- Terraform
- SSM Agent (AWS Systems Manager for CodeDeploy)

The project is split up as follows:

1. main.tf - contains all Terraform resources

2. variables.tf - contains all of the project's variables

3. /shell-scripts - contains all shell scripts

Terraform Code Snippets — main.tf

Defining the Terraform Provider and its region:
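A minimal sketch of what such a provider block can look like. The region and the provider version constraint are assumptions for illustration, not the project's actual settings.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed version constraint
    }
  }
}

# Every AWS resource in main.tf is created in this region.
provider "aws" {
  region = "us-east-1" # assumed region; replace with your own
}
```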

Creating an IAM role (ec2_role) that lets our EC2 instance authenticate, plus a policy attachment for that role:
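A role of this kind might be sketched as follows. The role name ec2_role comes from the text; the attachment's resource name and the managed policy (AmazonSSMManagedInstanceCore, chosen here because the instance runs the SSM Agent) are assumptions.

```hcl
resource "aws_iam_role" "ec2_role" {
  name = "ec2_role"

  # Trust policy: allows the EC2 service to assume this role.
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "ec2.amazonaws.com" }
    }]
  })
}

# Attaches an AWS managed policy to the role (assumed policy choice).
resource "aws_iam_role_policy_attachment" "ec2_policy_attachment" {
  role       = aws_iam_role.ec2_role.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
}
```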

Creating an IAM instance profile to associate with the EC2 instance:
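An instance profile is the wrapper through which an EC2 instance receives an IAM role. A minimal sketch, where the profile name ec2_profile is hypothetical and ec2_role is assumed to be defined elsewhere in main.tf:

```hcl
resource "aws_iam_instance_profile" "ec2_profile" {
  name = "ec2_profile"              # hypothetical name
  role = aws_iam_role.ec2_role.name # assumes ec2_role exists in main.tf
}
```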

Configuring our EC2 instance:
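One way the instance could be declared is sketched here. The variables ami_id and user_data_webserver_script are the ones named in this article; the instance type, the resource names, and the referenced profile, subnet, and security group are assumptions that would need to match the rest of main.tf.

```hcl
resource "aws_instance" "webserver" {
  ami           = var.ami_id
  instance_type = "t2.micro" # assumed instance type

  # The following three references use hypothetical resource names.
  iam_instance_profile   = aws_iam_instance_profile.ec2_profile.name
  subnet_id              = aws_subnet.public.id
  vpc_security_group_ids = [aws_security_group.webserver_sg.id]

  # Shell commands run on first boot (installs httpd and other dependencies).
  user_data = var.user_data_webserver_script

  tags = {
    Name = "webserver"
  }
}
```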

Defining where our .tfstate file is stored:
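Since the project uses S3, the state location may well be an S3 backend; that is an assumption, as are the bucket name, key, and region in this sketch. Note that backend blocks cannot reference variables, so the values must be literals.

```hcl
terraform {
  backend "s3" {
    bucket = "my-terraform-state-bucket"   # hypothetical bucket name
    key    = "terraform/terraform.tfstate" # hypothetical path inside the bucket
    region = "us-east-1"                   # assumed region
  }
}
```

Keeping the state in a remote backend means every pipeline run reads and updates the same state instead of starting from an empty one.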

Creating a security group:
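For a public web server, a security group along these lines would allow inbound HTTP and all outbound traffic. The resource names and the open CIDR ranges are assumptions; aws_vpc.main is assumed to be defined elsewhere in main.tf.

```hcl
resource "aws_security_group" "webserver_sg" {
  name        = "webserver_sg" # hypothetical name
  description = "Allow inbound HTTP and all outbound traffic"
  vpc_id      = aws_vpc.main.id # assumes a VPC named "main"

  ingress {
    description = "HTTP from anywhere"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    description = "All outbound traffic"
    from_port   = 0
    to_port     = 0
    protocol    = "-1" # -1 means all protocols
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```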

Creating a VPC (Virtual Private Cloud):
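A minimal sketch of a VPC with one public subnet. The CIDR ranges and resource names are illustrative assumptions, not the project's actual values.

```hcl
resource "aws_vpc" "main" {
  cidr_block           = "10.0.0.0/16" # assumed address range
  enable_dns_hostnames = true

  tags = {
    Name = "main"
  }
}

# A single public subnet for the web server (hypothetical layout).
resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "10.0.1.0/24"
  map_public_ip_on_launch = true # instances get a public IP automatically
}
```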

Creating an Internet Gateway:
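The gateway only needs to know which VPC it belongs to. In this sketch the names igw and main are hypothetical, and the VPC is assumed to be defined elsewhere in main.tf.

```hcl
resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.main.id # assumes a VPC named "main"

  tags = {
    Name = "igw"
  }
}
```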

Creating a Route Table and associating it with our Subnet:
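A route table that sends all non-local traffic to the Internet Gateway, together with its subnet association, might look like this. All resource names are hypothetical and assume the VPC, subnet, and gateway are defined elsewhere in main.tf.

```hcl
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  # Default route: anything outside the VPC goes through the Internet Gateway.
  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }
}

# Without this association the subnet keeps using the VPC's main route table.
resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}
```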

Creating a Launch Template:
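A launch template captures the instance settings so they can be reused. This sketch reuses the article's ami_id and user_data_webserver_script variables; the instance type and the referenced profile and security group names are assumptions.

```hcl
resource "aws_launch_template" "webserver" {
  name_prefix   = "webserver-" # hypothetical prefix
  image_id      = var.ami_id
  instance_type = "t2.micro"   # assumed instance type

  iam_instance_profile {
    name = aws_iam_instance_profile.ec2_profile.name # hypothetical profile
  }

  vpc_security_group_ids = [aws_security_group.webserver_sg.id] # hypothetical group

  # Unlike aws_instance, launch templates expect base64-encoded user data.
  user_data = base64encode(var.user_data_webserver_script)
}
```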

End of part 1.

Terraform Code Snippets — variables.tf

Note: the AMI (Amazon Machine Image) referenced by ami_id may be deprecated over time, in which case you will need to replace it with the ID of a current Linux image. The same applies to ARNs, bash commands, and AWS region names!

These are the variables used in our project:

Notice that the variable user_data_webserver_script contains the install commands for all dependencies required on our EC2 instance, and that the commands are separated by newlines (\n).
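The two variables named above might be declared along these lines. The AMI ID is a placeholder, and the commands in the script are an assumed example for an Amazon Linux image that uses yum; the project's actual script installs more dependencies.

```hcl
variable "ami_id" {
  description = "AMI used for the web server instance"
  type        = string
  default     = "ami-xxxxxxxxxxxxxxxxx" # placeholder, not a real AMI ID
}

variable "user_data_webserver_script" {
  description = "Commands run on first boot to set up the web server"
  type        = string

  # Each \n ends one command, so the string becomes a multi-line script.
  default = "#!/bin/bash\nyum update -y\nyum install -y httpd\nsystemctl enable --now httpd\n"
}
```

Writing the script as a single string keeps it in variables.tf with the other settings; a heredoc would be an equivalent, more readable alternative.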

End of part 2.

Shell Code Snippets — /shell-scripts

aws-credentials.sh

SSM_agent_dependencies.sh

terraform-apply.sh

aws-credentials.sh

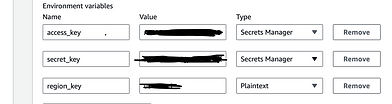

This shell script authenticates our EC2 instance session by declaring the environment variables defined in the AWS CodeBuild settings:

SSM_agent_dependencies.sh

This shell script installs the dependencies the SSM Agent needs to run and to manage the EC2 resources:

terraform-apply.sh

This shell script clones our GitHub repository, changes into the project directory, and applies our Terraform configuration:

End of part 3.

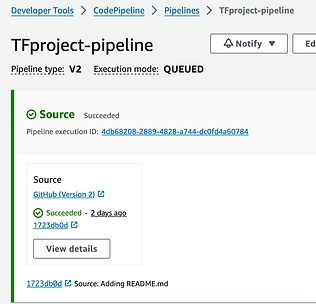

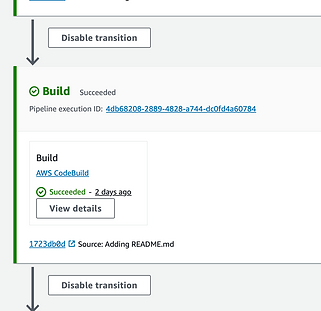

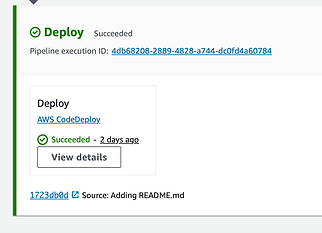

AWS CodePipeline - Configurations

Source: GitHub

Authentication method: SSH key

It is crucial to set up the environment variables accordingly:

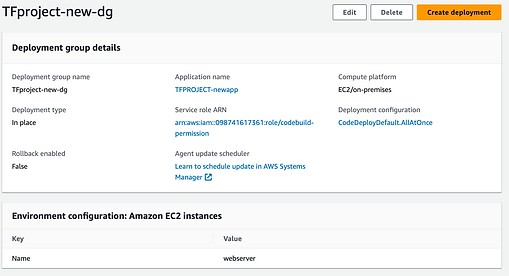

It is also important to configure AWS CodeDeploy in the following way:

Note: you should be able to use the default Service Role ARN to deploy this application.

End of part 4.

Challenges faced & lessons learned:

1. Dealing with troubleshooting issues:

I ran into an error that prevented me from authenticating my EC2 instances when running shell scripts through buildspec.yml. I later found that I could pass the same shell commands through Terraform's user_data, which allowed the deployment to work.

2. Keeping it safe:

Selecting the right IAM roles was also a challenge, since most of the integrated services required specific roles in order to run. This gave me the opportunity to create custom roles that made the process easier.

3. Terraform:

Configuring the .tfstate file, passing shell commands through user_data, and creating custom ec2_policy_attachments gave me fresh, in-depth knowledge that proved valuable to this project and will most likely be valuable in future ones.

4. CodePipeline:

Despite having no prior experience with pipelines, I found learning how to configure one properly both satisfying and exciting. I now understand how AWS CodePipeline works well enough that I'm sure it will be useful in future tasks.

End of part 5.

Conclusion:

Project Review:

Throughout the course of this project, I encountered various challenges that tested my problem-solving skills and expanded my knowledge in cloud infrastructure and deployment processes. From troubleshooting issues to ensuring security and mastering new tools, each obstacle presented an opportunity for growth and learning.

Future plans and possible improvements:

- Adding CSS to our index.html file would give end users a better interface;
- Adding Amazon Route 53 would make the web server easier to reach, since it is a DNS service that can map a domain name to the site so users can find it;
- Adding a load balancer is also a good idea, since it distributes network traffic dynamically and helps spread the load evenly across servers.

End.

Rauni Ribeiro / Cloud Engineer